- Sloth Bytes

- Posts

- 🦥 Data Cleaning For Dummies

🦥 Data Cleaning For Dummies

Hello friends!

Welcome to this week’s Sloth Bytes. I hope you had a great week!

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.

Data Cleaning For Dummies

When I was studying machine learning in college, I unfortunately learned a hard truth:

You spend more time with data instead of building cool AIs.

I remember when the course first started and we were learning regression.

I had to use a dataset that the professor provided.

I was so excited to build my first ML model and the dataset we were given made sense, but then I took a closer look at the data:

“NaN”, ““, outliers, typos, and zeroes where there shouldn’t be.

That’s when I learned I had to clean my data.

I also learned the truth about data science and machine learning.

Instead of building cool AIs, you’re just the janitor of tech.

Cleaning up weird broken data….

Real Data Is Always Messy

Perfect datasets only exist in tutorials. Real data comes from:

Human input: Typos, skipped fields, jokes, skepticism

System errors: Sensor failures, database crashes

Integration issues: Different systems, different formats

Time: Requirements change, fields get repurposed

If your data looks perfect, you're not looking hard enough or someone already did the hard work.

Data Exploration First

Before cleaning, understand what you're working with:

print(df.info()) # Data types and missing values

print(df.describe()) # Statistical summary

print(df.nunique()) # Unique values per column

# Visualize missing data

import seaborn as sns

sns.heatmap(df.isnull(), cbar=True)

Alright you’ve explored the data and you definitely see some problems.

What do you do?

Some Common Data Problems

Missing Values

# What you expect

age: [25, 30, 35, 40]

# What you get

age: [25, NaN, 35, None, "", "N/A", -999, 0]

# HOW DID THIS HAPPEN????

Inconsistent Formats

# Date chaos

dates: ["2023-01-15", "01/15/2023", "Jan 15, 2023", "15-01-23"]

# Category madness

categories: ["Red", "red", "RED", "R", "crimson", "Red "]

Outliers and Impossible Values

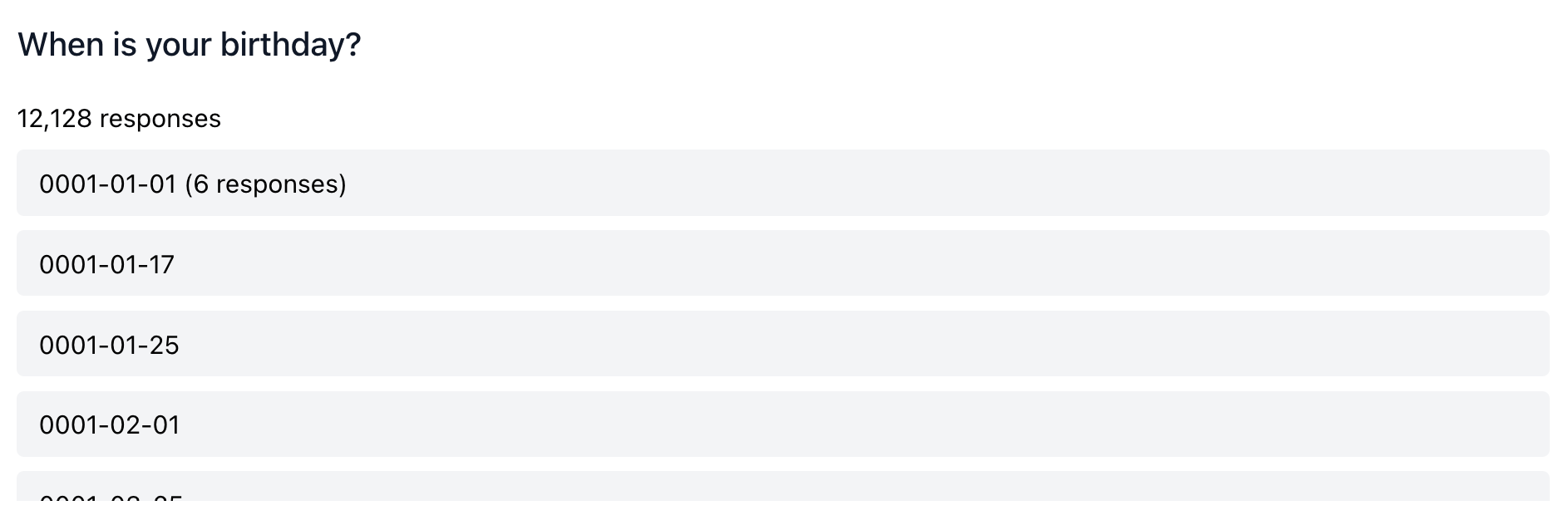

These are REAL values from my survey. (Thanks…)

# Birthday values

birthdays: ["0001-01-17", "275760-08-06", "9999-09-09", "0006-09-24"]

# Ah yes, I love that I have readers born in the year 1 and the year 275760..

Here’s proof btw:

Data Cleaning Strategies

1. Handling Missing Values

Deleting the data (The easy way out):

df_clean = df.dropna() # Simple but might lose 80% of the datasetFill with default values:

Another option is to try and fill in the missing values with some type of data.

df['age'].fillna(df['age'].median(), inplace=True)

# Numbers: use median

df['category'].fillna('Unknown', inplace=True)

# Text: use a placeholder/default valuePredict missing values:

You can try to predict the values using something like the data’s mean, median, etc

import numpy as np

from sklearn.impute import SimpleImputer

imp_mean = SimpleImputer(missing_values=np.nan, strategy='mean')

#Fill all missing values with the data's mean.

df_filled = imputer.fit_transform(df)

2. Inconsistent Formats

For these data issues, you usually standardize them.

Basically turning them into a proper format.

# 1. Clean any capitalization inconsistencies

df['color'] = df['color'].str.lower().str.strip()

# 2. Mapping variations

color_mapping = {'r': 'red', 'crimson': 'red'}

df['color'] = df['color'].map(color_mapping).fillna(df['color'])

# 3. Typos with fuzzy matching (very simple example)

#Yes this package is real

from thefuzz import process

countries = df['country'].unique()

matches = process.extract("US", countries, limit=10)

#Pretend this exists

fix_typos(df["country"], matches)3. Dealing with Outliers

Once again, use something like median, mean, absolute value, etc.

Domain knowledge: Maybe you know a lot about this dataset and you can make some “educated guesses.”

This one is pretty risky, but hey if you think you’re smart I won’t stop you.

It’s not my problem.

# I think it's reasonable to say there's nobody who has a negative age or is over 3000 years old...

df_clean = df[(df['age'] >= 0) & (df['age'] <= 3000)]

# Maybe I'm wrong?When to Stop Cleaning

Perfect data doesn't exist. Know when "good enough" is actually good enough:

Your model can handle some messiness

Errors don't affect your target variable

You're spending more time cleaning than modeling

Data cleaning isn't glamorous, but it's the foundation of everything else. A mediocre model with clean data beats a sophisticated model with messy data.

Your ML model will only be as good as the data you feed it.

Garbage in, garbage out.

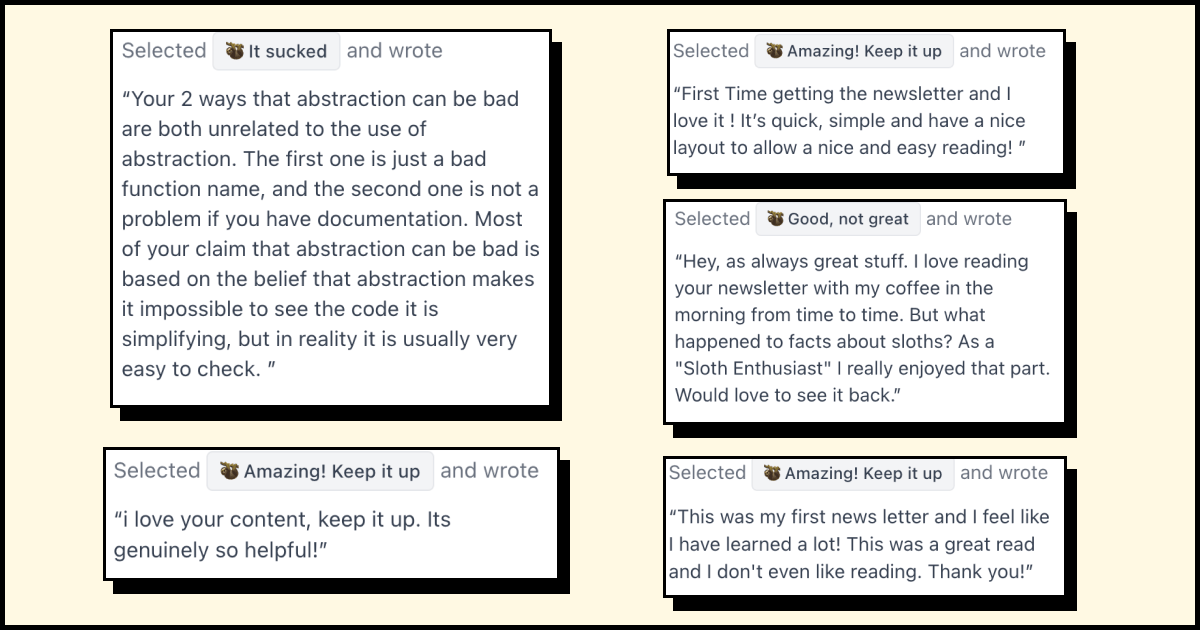

Thanks for the amazing feedback! I’ll do better. As for the sloth facts, I ran out of them 😭

Thanks to everyone who submitted!

NeoScripter (PHP solution, respect), AspenTheRoyal, gcavelier, SauravChandra10, and RelyingEarth87.

Word Overlapping

Given two words, overlap them in such a way, morphing the last few letters of the first word with the first few letters of the second word.

Return the shortest overlapped word possible.

Examples

overlap("sweden", "denmark")

output = "swedenmark"

overlap("honey", "milk")

output = "honeymilk"

overlap("dodge", "dodge") "dodge"Notes

All words will be given in lowercase.

If no overlap is possible, return both words one after the other (example #3).

How To Submit Answers

Reply with

A link to your solution (github, twitter, personal blog, portfolio, replit, etc)

or if you’re on the web version leave a comment!

If you want to be mentioned here, I’d prefer if you sent a GitHub link or Replit!

That’s all from me!

Have a great week, be safe, make good choices, and have fun coding.

If I made a mistake or you have any questions, feel free to comment below or reply to the email!

See you all next week.

What'd you think of today's email? |

Want to advertise in Sloth Bytes?

If your company is interested in reaching an audience of developers and programming enthusiasts, you may want to advertise with us here.

Reply